OpenAI GPT-2: An Almost Too Good Text Generator! | Summary and Q&A

TL;DR

OpenAI has developed GPT-2, an AI algorithm that can read and comprehend text, answer questions, complete text, and perform other natural language processing tasks with minimal supervision.

Key Insights

- ✊ GPT-2 showcases the power of leveraging large amounts of data and compute power to achieve impressive results in natural language processing tasks.

- 🎭 Because it needs very little task-specific knowledge built in, GPT-2 works as a versatile, general algorithm that can handle many different tasks.

- ✊ Not all learning techniques scale well with increased compute power, making GPT-2's scalability an important accomplishment.

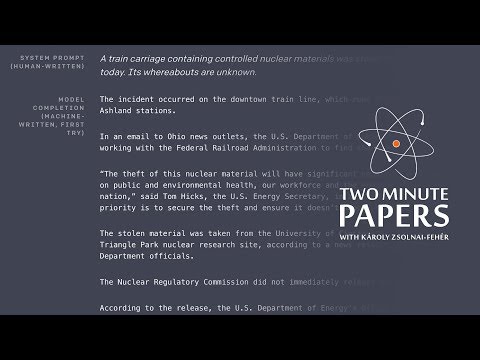

- 👏 The question of whether to release the full GPT-2 model raises ethical concerns, as it has the potential for both positive and nefarious uses.

- 👨‍💻 How to balance publishing papers and source code against ethical considerations is an open question that deserves further discussion in a conference-style meeting.

- 🖇️ The paper and additional reading materials linked in the video description provide more in-depth information on GPT-2 and its implications.

- 👻 The late release of this video allowed for a more informed discussion after intense debates surrounding the publication of the GPT-2 algorithm.

Transcript

Dear Fellow Scholars, this is Two Minute Papers with Károly Zsolnai-Fehér. This is an incredible paper from OpenAI, in which the goal is to teach an AI to read a piece of text and perform common natural language processing operations on it, for instance, answering questions, completing text, reading comprehension, summarization, and more. And not o...

Questions & Answers

Q: How does GPT-2 learn to read and understand text without supervision?

GPT-2 learns through unsupervised learning: instead of being given labeled examples for each task, it reads a vast amount of text from the internet and is trained to predict what comes next, gradually picking up the patterns and structure of language.
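To make the idea of learning from raw text concrete, here is a minimal sketch in Python. It is not GPT-2: GPT-2 is a large neural network, while this toy only counts which word follows which. The function names `train_bigram_model` and `complete` and the tiny corpus are made up for illustration. What it shares with the real thing is the setup: no labels are provided, and the only training signal is the next word in the text itself.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the raw text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def complete(model, prompt, max_new_words=5):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus; no labels, just raw text.
corpus = (
    "the unicorns spoke perfect english . "
    "the unicorns lived in a valley . "
    "the scientists found the unicorns ."
)
model = train_bigram_model(corpus)
print(complete(model, "the scientists", max_new_words=3))
# prints: the scientists found the unicorns
```

The toy only looks one word back, so it falls apart quickly on longer continuations. Scaling the same "predict what comes next" objective to a far larger model and dataset is the part that the summary credits for GPT-2's results.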

Q: How well does GPT-2 perform on text completion tasks?

GPT-2 can complete text, as shown by its continuation of a fictional story about unicorns speaking perfect English. The output is not perfect, and the impressive result shown took several attempts to obtain.

Q: Can GPT-2 answer questions competently?

Yes, GPT-2 can answer questions effectively. It can comprehend and respond to queries based on the given text, showcasing its ability to understand and process information.

Q: How does GPT-2 compare to human reading comprehension?

GPT-2 achieves state-of-the-art results in language modeling, but it may not match human-level reading comprehension. It performs well, yet there is still room for improvement here.

Summary & Key Takeaways

- OpenAI's GPT-2 algorithm is trained to read and understand text, and it can perform various natural language processing tasks.

- The algorithm read 40 gigabytes of internet text, learning the intricacies of language by itself.

- GPT-2 can complete text and answer questions, showing impressive results, although it may not match human-level comprehension.
